What is Computer Vision?

- Engineering

- KI & Daten

This is the first post in our computer vision blog series. The main purpose of this series is to cover recent advances in computer vision, to write about our own R&D in computer vision, and in general to make “AI powered” computer vision more accessible to the general public because it really isn’t magic.

Therefore, our first entry will explain what computer vision is, focusing on the most in-demand task of computer vision applications – object detection. But first, let’s do some groundwork and build our understanding.

What’s computer vision?

Computer vision deals with automated techniques for extracting information from images or videos. The goal of computer vision, from the perspective of engineering, is to emulate the human vision system. Thus, computer vision is a sub-field of artificial intelligence.

Computer vision includes tasks such as image classification, object detection, activity recognition, pose estimation, object tracking, and motion estimation, amongst others. Real-world applications include face recognition for logging into smart devices, visual navigation systems in autonomous vehicles, and tumour detection in medical images [1].

Modern computer vision methods are mostly developed using deep learning, a sub-field of machine learning. Such methods require an extensive set of annotated real-world data, model development and training. These deep learning-based computer vision methods are responsible for most advances in computer vision in the last decade [2].

Image Classification

Image classification is the task of assigning an image with a category label from a set of predefined labels based on the contents of the image. This is considered one of the core problems in computer vision due to the large number of applications it has.

An example of an image classification task would be to classify images with a cat in them to belong to the “cat” category, and images with a dog in them to belong to the “dog” category. How image classifiers work, however, is beyond the scope of this blog post, but worry not, we’ll get to that in part 3 of this series.

Challenges

However, if there is a cat and a dog in the same image, the above example scenario will obviously fail unless a new category, such as, “both” is introduced. Another point worth mentioning is that the image classification system has no semantic understanding of what a cat or a dog is. The reason behind this is that the computer doesn’t see images the same way us humans do.

So, as illustrated in Figure 2, instead of seeing a cat, the computer sees a bunch of numbers in a table form called the matrix. Each number in the matrix corresponds to a specific pixel in the image. The dimensionality of the matrix is determined by the image; a colour image is a matrix of size width x height x 3, as colour images are made up of three colour channels – red, green, blue – commonly referred to as RGB. Grayscale – or black-and-white – images are made up of only one channel, so the resulting matrix is of size width x height.

The way the computer sees images presents several challenges, however:

- Viewpoint variation: The same instance of an object can be captured from different angles.

- Scale variation: Objects belonging to the same class often vary in scale.

- Deformation: Many objects can be deformed or change shape, even in extreme ways.

- Occlusion: Objects are not always necessarily fully visible.

- Illumination conditions: The amount of illumination changes pixel values.

- Background clutter: Objects may blend into the surrounding environment, thus making them hard to detect.

- Intra-class variation. Objects within the same class can vary broadly in terms of appearance.

The reason why these are challenges is that they all change the matrix representation of the image and, especially, the instance of the object in the image that the computer sees. This, as mentioned earlier, is because the matrix representation of an image is nothing but a 2D or 3D (grayscale or RGB) matrix of brightness values.

Another common challenge that we didn’t mention yet is dependent on the granularity of the classification task. Say, if it’s not enough to classify an image either as “cat” or “dog” but with a specific breed of the respective species, then we are faced with the challenge of granularity, as illustrated in Figure 4. The more granular the classification task is, the more challenging the task of classifying an image becomes, at least in most cases.

How it works

Assuming that we have enough of the right kind of data, for example, images of cats and dogs that are in their respective labels. We split the data into what are called the training set and the testing set. Then, we create an image classifier and train it. During training, the model (i.e the classifier) only sees images from the training set and tries to predict which category each image belongs to (e.g. 0 for cat and 1 for dog). However, the output isn’t actually 0 or 1, but a continuous number between 0 and 1. If the classifier outputs 0.5 for an image, it basically is a random guess, that is, the model doesn’t know if there’s a cat or a dog in the image. Once training is done, we test the classifier using the testing set, which contains images unseen before by the model. This is done in order to determine how well the model generalises to previously unseen data.

Now that we’re familiar with image classification and its challenges, we can move on to object detection, which builds upon image classification (quite literally actually, but we’ll get back to that later on in this series).

Object Detection

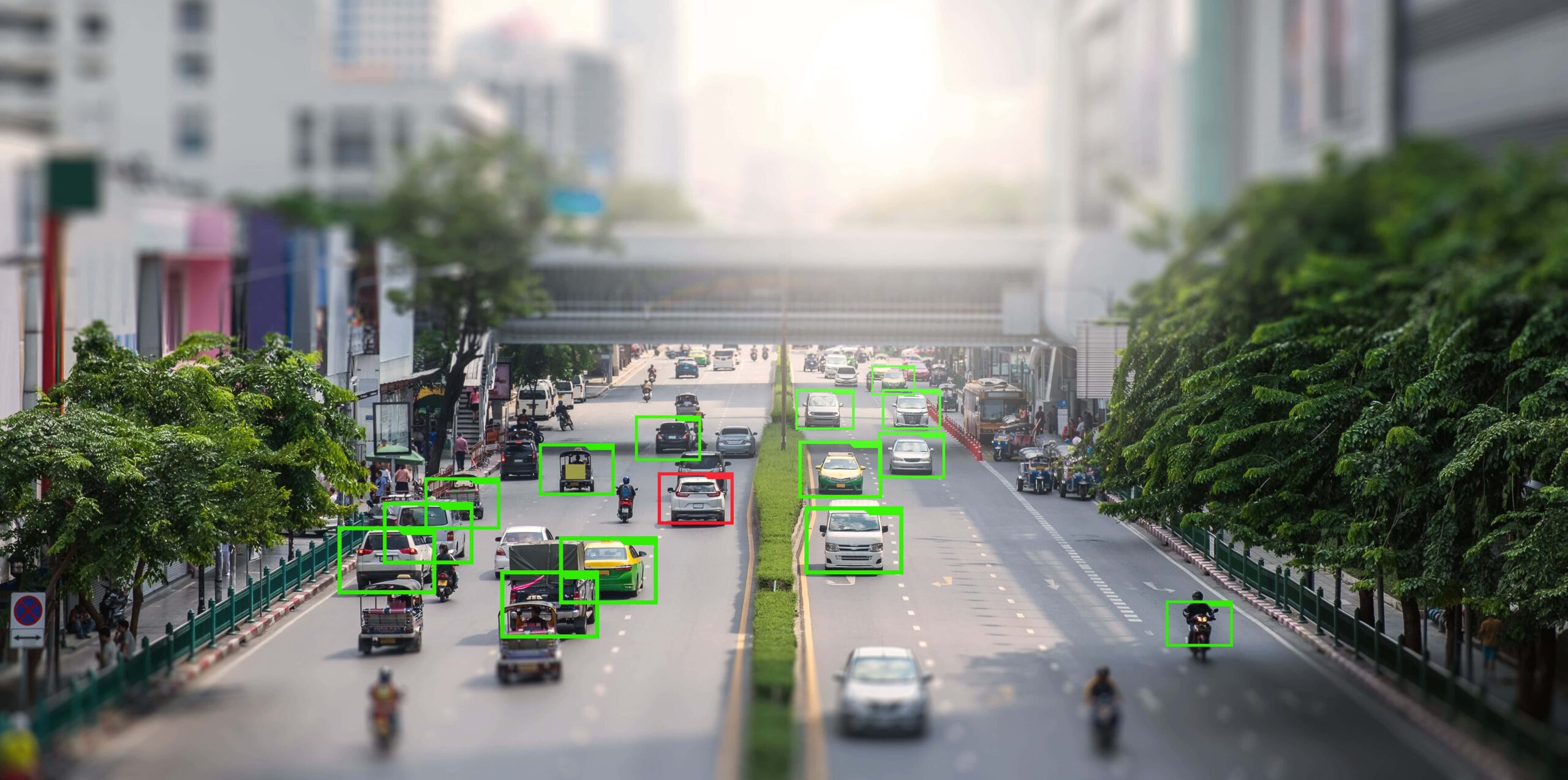

Object detection is the task of locating and classifying semantic instances of objects of a particular class in an image. So, whereas our image classifier would simply output a class label for a given image, our object detector would output the coordinates (referred to as the bounding box) and their corresponding class labels for the objects in a given image.

Challenges

We already know that the computer sees an image as a matrix, and we also know the challenges that arise from the matrix representation of an image. Object detection shares these challenges with image classification, and on top, has its own unique challenges. Because of course it does.

The challenges in object detection are:

- Multiple outputs: Need to output a variable number of predictions as the number of objects in an image isn’t constant.

- Multiple types of output: Need to not only predict ”what” (category label) but also “where” (bounding box)

- Large images: Detection requires higher resolution images because otherwise objects are small and pixelated, and thus have a poor matrix representation; this makes object detection a computationally heavy task.

How it works

Now, object detection might seem like quite the leap from image classification, which kind of is true, but not really. One way to look at object detection is that it’s nothing more than image classification on sub-images in a given image, where each sub-image is defined by its coordinates, that is, the bounding box. Basically, the object detector locates an instance of an object in the image, crops the instance based on the bounding box and runs it through an image classifier. The result of this is illustrated in Figure 5.

There are two approaches to object detection, which are two-stage methods and one-stage methods. One-stage methods prioritise inference speed, that is, the speed at which the objects in a given image are located and classified; two-stage methods prioritise prediction accuracy, that is, they don’t care about inference speed. That being said, we’ll get into the details of object detection in part 4.

What’s next?

Now that we have a conceptual understanding of computer vision, especially image classification and object detection, and most importantly, how the computer sees images, we can move on to the theory of computer vision algorithms. However, we’ll focus on machine learning based algorithms. In the next post, part 2, we’ll cover convolutional neural networks, the foundational building block of modern computer vision applications.

Sources:

[1] https://www.mathworks.com/discovery/computer-vision.html?s_tid=srchtitle_computer%20vision_3

[2] https://www.ibm.com/topics/computer-vision

[3] https://cs231n.github.io/classification/

[4] https://web.eecs.umich.edu/~justincj/slides/eecs498/498_FA2019_lecture02.pdf

[5] https://web.eecs.umich.edu/~justincj/slides/eecs498/498_FA2019_lecture15.pdf

Über den Autor

Phillip Schulte