Challenges of Occlusion and Poor Visibility in Object Detection

Object detection, a fundamental task in computer vision, has witnessed substantial progress in the deep learning era. However, the challenges presented by occlusion and poor visibility conditions remain unsolved. This blog post explores the nature of these challenges by shedding light on how the inherent limitations of computer vision, rooted in the numeric representation of pixels in digital images, pose challenges for deep learning-based object detectors. As a practical example, we look into how occlusion and poor visibility affect traffic sign detection, and what can be done to address these issues.

Occlusion and its Impact on Digital Images

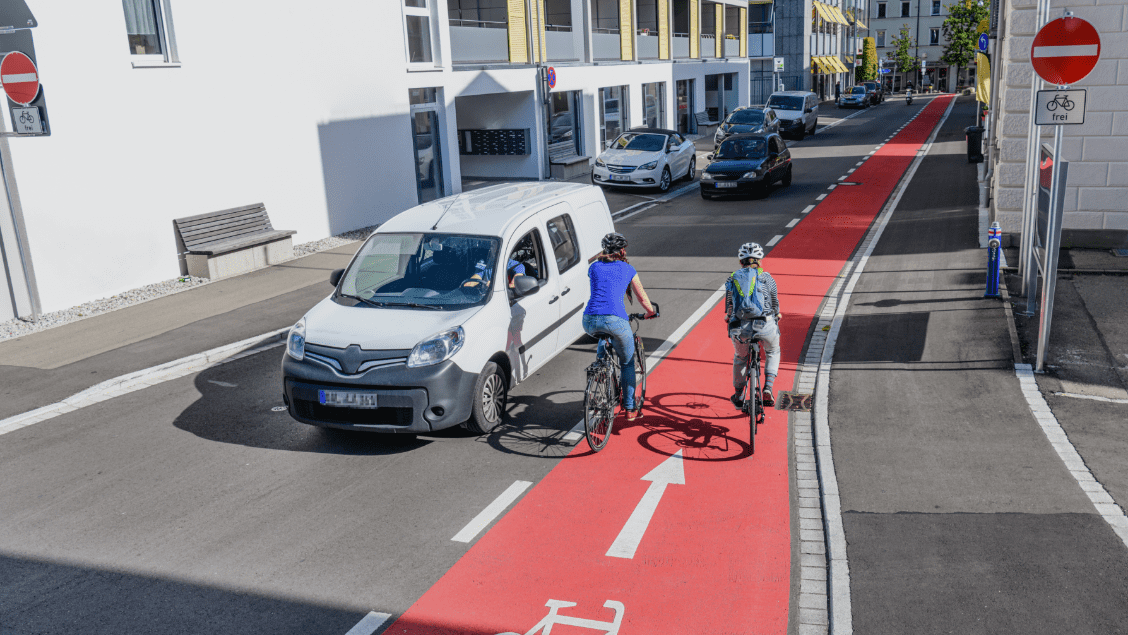

Occlusion, the partial or complete obstruction of an object in an image, introduces complexity by altering the visual information encoded in the image's pixels. In traffic sign detection, occlusion can take various forms, such as stickers or graffiti on signs, or external objects like tree branches partially blocking the view. From the point of view of computer vision, occlusion directly changes the pixel values: in the obstructed region, the pixels hold the values of the occluding object rather than those of the sign, so they no longer represent the object faithfully.
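To make this concrete, here is a minimal sketch using NumPy. The 8x8 grayscale "sign", the sticker position and the intensity values are all made up for illustration; the point is only that an occluder overwrites the object's pixel values.

```python
import numpy as np

# Hypothetical 8x8 grayscale "sign": a bright 4x4 square on a dark background.
sign = np.zeros((8, 8), dtype=np.uint8)
sign[2:6, 2:6] = 200

# Simulate a sticker by overwriting a 2x2 patch inside the sign with mid-grey.
occluded = sign.copy()
occluded[3:5, 3:5] = 90

# Pixels that no longer carry the sign's true values.
changed = occluded != sign
changed_fraction = changed.sum() / sign[2:6, 2:6].size

print(f"{changed.sum()} pixels changed, {changed_fraction:.0%} of the sign area")
```

A detector only ever receives `occluded`; the original values in the covered region are simply not in the data.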

Poor Visibility Conditions

Poor visibility conditions, including fog, rain, extreme darkness and extreme brightness, amplify the challenges of object detection. Fog, for instance, scatters light and reduces contrast, producing a haze that shifts the pixel values across the whole image. The reason is simple: each pixel is a brightness value that represents how much light the sensor registered at that position. Rain can leave streaks and droplets on the camera lens, which in turn distort the brightness values. Extreme darkness and brightness, on the other hand, result in underexposed or overexposed regions, affecting the overall image quality and the visibility of objects. Poor visibility conditions therefore introduce noise and distortion that corrupt the pixel values, making it challenging to detect traffic signs, just as it is challenging for us humans to see clearly in similar conditions.
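The effect of fog on contrast can be sketched with a common simplified haze model, which blends the clear image with a uniform "airlight" according to a transmission value. The function name `add_fog`, the single global transmission value and the tiny 2x2 image are assumptions made for illustration; in real fog the transmission varies with the distance to each object.

```python
import numpy as np

def add_fog(image, transmission, airlight=1.0):
    """Blend a clear image (values in [0, 1]) with a uniform airlight.

    transmission = 1.0 means no fog; values near 0.0 mean dense fog.
    """
    return image * transmission + airlight * (1.0 - transmission)

# Hypothetical high-contrast patch: dark and bright pixels side by side.
clear = np.array([[0.1, 0.9],
                  [0.9, 0.1]])
foggy = add_fog(clear, transmission=0.4)

# Contrast measured as the gap between the brightest and darkest pixel.
clear_contrast = clear.max() - clear.min()
foggy_contrast = foggy.max() - foggy.min()

print(f"contrast: clear={clear_contrast:.2f}, foggy={foggy_contrast:.2f}")
```

Every pixel is pushed towards the airlight value, so the gap between dark and bright regions shrinks in proportion to the transmission.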

Challenges from a Computer Vision Perspective

Now that we have established a basic understanding of how the computer “sees” images, we arrive at the crux of the problem, which is how convolutional neural networks (CNNs) work. CNNs are essentially pattern recognition algorithms that learn hierarchical representations of features from the input data (in our case images) in order to make predictions. Occlusion disrupts these learned patterns by obscuring crucial features, and the altered brightness values resulting from poor visibility further hamper pattern recognition, as CNNs struggle to extract meaningful features from corrupted pixel values. It is important to note that this problem is not limited to deep learning methods such as CNNs; it affects all computer vision methods, both old and new. In short, there is nothing we can do about how digital images are represented, but there is a lot we can do about the methods used to process them.

Mitigating Challenges

Addressing the challenges of occlusion and poor visibility conditions requires innovative approaches that make object detection systems more robust, especially for CNNs that need to work well in real-life scenarios. In this section we list three of the most common approaches currently used to mitigate these challenges.

1. Image Augmentation:

Image augmentation is a prevalent technique for tackling the challenges of occlusion and poor visibility. By using image processing techniques to artificially introduce variations in brightness, occlusion and weather conditions during training, models are exposed to a more diverse range of scenarios. This helps them generalise better to real-world conditions. However, designing and implementing good image augmentation strategies is not only time-consuming, but also a roundabout way of addressing the underlying problems. There is nothing wrong with image augmentation, and it is highly recommended, but it treats the symptoms rather than the cause.

In the case of traffic sign detection, image augmentation could involve simulating scenarios where stickers partially cover signs or where visibility is compromised due to adverse weather conditions. Training models on augmented datasets helps them learn to adapt to variations in brightness values and improves their resilience to occlusion.
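As a rough sketch of what such an augmentation could look like, the following NumPy function applies a random brightness change and pastes one random occluding patch. The function name `augment`, its parameters and their default ranges are illustrative assumptions rather than a recommended recipe; in practice an established augmentation library would usually be used.

```python
import numpy as np

def augment(image, rng, max_patch=0.5, brightness_range=(0.5, 1.5)):
    """Return a randomly brightened, partially occluded copy of a float image in [0, 1]."""
    out = np.array(image, dtype=np.float32)  # np.array copies, so the input stays untouched
    h, w = out.shape[:2]

    # Random brightness: scale all pixel values, then clip back to the valid range.
    factor = rng.uniform(*brightness_range)
    out = np.clip(out * factor, 0.0, 1.0)

    # Random occlusion: a rectangle covering at most max_patch of each side length.
    ph = rng.integers(1, max(2, int(h * max_patch) + 1))
    pw = rng.integers(1, max(2, int(w * max_patch) + 1))
    top = rng.integers(0, h - ph + 1)
    left = rng.integers(0, w - pw + 1)
    out[top:top + ph, left:left + pw] = rng.uniform(0.0, 1.0)  # flat grey "sticker"
    return out

rng = np.random.default_rng(seed=0)
image = np.full((32, 32, 3), 0.5, dtype=np.float32)  # placeholder for a sign crop
augmented = augment(image, rng)
```

Calling `augment` anew for every training sample means the model rarely sees the exact same occlusion or brightness twice.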

2. Transfer Learning:

Transfer learning is another mitigation strategy, which leverages models pre-trained on large datasets. When these models are fine-tuned on specific tasks like traffic sign detection, the networks can inherit features that are less sensitive to occlusion and variations in brightness values. Transfer learning enables models to capitalise on knowledge gained from diverse datasets and enhances their ability to handle challenging conditions. While transfer learning is widely used in practice and highly recommended (although not necessarily in all cases), even pre-trained models suffer from the same challenges, and they might not always have learned the most useful features for the target task.

For instance, a pre-trained model might have learned to recognize certain features of a stop sign, and this knowledge can be fine-tuned to improve performance in scenarios where parts of the sign are occluded.

3. Ensemble Methods:

Ensemble methods (or ensemble learning) combine predictions from multiple models and offer a robust approach to handling occlusion and poor visibility. By aggregating predictions from diverse models, ensembles compensate for the limitations of individual models and improve overall detection performance. There are different ways of doing ensemble learning, such as a mixture of experts, where each model is an expert on, for example, a subset of traffic signs. Ensemble learning has obvious computational costs, since several models must be run for every image, and it also takes a lot of time to design and implement several different models, or to train different instances of the same model on different variations of the input data.

In the context of traffic sign detection, an ensemble of models may excel in scenarios where one model struggles due to occlusion, as the combined predictions provide a more comprehensive understanding of the scene.
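The simplest form of aggregation, averaging class probabilities across models, can be sketched as follows. The class names and probability values are invented for illustration, and the sketch covers only the classification of a single sign crop; combining full detections would additionally require merging bounding boxes.

```python
import numpy as np

classes = ["stop", "yield", "speed_limit"]

# Hypothetical softmax outputs of three models for the same occluded sign crop.
model_probs = np.array([
    [0.40, 0.45, 0.15],  # model A is misled by the occlusion and prefers "yield"
    [0.70, 0.20, 0.10],  # model B
    [0.60, 0.25, 0.15],  # model C
])

# Soft voting: average the probabilities, then pick the most likely class.
ensemble_probs = model_probs.mean(axis=0)
prediction = classes[int(ensemble_probs.argmax())]

print(prediction, ensemble_probs.round(3))
```

Model A on its own would have chosen the wrong class, but the averaged prediction follows the two models that were not thrown off by the occlusion.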

Future Research Directions

While current approaches – including but not limited to the ones mentioned above – to mitigate the challenges have been proven to work in practice, there is still plenty of room for research that aims to treat the cause rather than the symptoms. In this section we highlight a couple of different research topics that we are working on in the context of our traffic sign detection research project, DeepStreet-M, which is funded by the German Federal Ministry for Digital and Transport (mFUND).

1. Context-aware Object Detection:

The goal of context-aware object detection is not only to detect objects in an image but also to consider the surrounding context (spatial and semantic) to improve detection accuracy. Spatial context refers to the spatial relationships between objects in an image; e.g. a traffic sign is most likely to be located at the side of the road rather than in the middle of the road surface. Semantic context refers to the semantic relationships between objects; e.g. traffic signs are usually mounted on signposts.

Context-aware object detection can potentially enable models to make better informed predictions in the presence of noise by understanding the spatial and semantic relationships between objects in the scene and discerning the significance of obscured features.
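A deliberately simple way to illustrate spatial context is to rescore detections with a hand-written prior. The function `rescore`, the detection dictionaries, the road extent and the penalty value below are all hypothetical; a context-aware detector would learn such relationships from data instead of relying on a fixed rule.

```python
def rescore(detections, road_x_range, penalty=0.5):
    """Down-weight detections whose box centre lies within the road's horizontal extent."""
    left, right = road_x_range
    rescored = []
    for det in detections:
        x_min, _, x_max, _ = det["box"]  # box format: (x_min, y_min, x_max, y_max)
        centre_x = (x_min + x_max) / 2
        on_road = left <= centre_x <= right
        score = det["score"] * (penalty if on_road else 1.0)
        rescored.append({**det, "score": score})
    return rescored

# Two hypothetical candidate detections with similar raw confidence.
detections = [
    {"label": "stop", "box": (20, 40, 60, 80), "score": 0.55},      # at the roadside
    {"label": "stop", "box": (300, 200, 340, 240), "score": 0.60},  # on the road surface
]

rescored = rescore(detections, road_x_range=(150, 500))
best = max(rescored, key=lambda d: d["score"])
print(best["box"], round(best["score"], 2))
```

With the prior applied, the roadside candidate outranks the one on the road surface even though its raw score was lower.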

2. Capsule Networks:

Capsule networks aim to learn more robust representations by taking into account the part-whole hierarchies and instantiation parameters of objects. A part-whole hierarchy describes the spatial relationship between an object as a whole and its parts, and instantiation parameters describe object pose, texture and other descriptive features. Such robust representations are particularly useful when dealing with occlusion; e.g. a model can correctly detect an occluded traffic sign from its visible parts alone, because it has learned the part-whole hierarchy of the object.

Capsule networks can potentially improve classification performance by considering the spatial hierarchies and instantiation parameters of objects, which gives them a better “understanding” of the physical constraints of objects (for example, that a nose cannot be on the forehead). This addresses a well-known shortcoming of traditional CNNs, which struggle to learn such spatial hierarchy information.

3. Enhanced Image Processing for Representation Learning:

In the context of representation learning, image processing involves applying specific techniques to the input images at the early stages of the model. The goal is to make the model learn how to improve the quality of the input images by mitigating the effects of poor visibility conditions, and thereby to enable the learning of clearer representations. This can be achieved, for example, by integrating image processing techniques such as dehazing, colour balancing and denoising into the model and letting the model learn how best to apply them.

Image processing techniques can significantly improve the performance of object detection models operating in poor visibility environments by enhancing object boundaries and improving image quality consistency across different environments. Sharper object boundaries and consistent image quality are valuable for representation learning, as they enable the model to focus on object-related patterns.
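As a small illustration of the kind of operation involved, the sketch below brightens an underexposed patch with gamma correction. It is a fixed-parameter stand-in: the function name `gamma_correct`, the gamma value and the pixel values are assumptions, and in the learned setting described above a parameter like `gamma` would be optimised together with the rest of the model rather than set by hand.

```python
import numpy as np

def gamma_correct(image, gamma):
    """Apply out = image ** gamma to a float image in [0, 1]; gamma < 1 brightens."""
    return np.clip(image, 0.0, 1.0) ** gamma

# Hypothetical underexposed patch: all values crowded near zero.
dark = np.array([[0.01, 0.04],
                 [0.09, 0.16]])
brightened = gamma_correct(dark, gamma=0.5)

print(brightened)
```

The correction spreads the dark values over a wider range, which makes differences between neighbouring pixels easier to pick up in later layers.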

Conclusion

The challenges of occlusion and poor visibility conditions in object detection, amplified by the limitations of CNNs, highlight the need for research and innovative solutions. We at P&M are addressing these fundamental challenges in the context of our R&D project by focusing on the approaches highlighted in the previous section. Our aim is to develop methods that directly mitigate (and ideally fully remove) the inherent limitations of CNNs as outlined in the first half of this blog post.